SIFEL Home page

SIFEL - SImple Finite ELements

Examples of Solved Problems Based on Homogenization

The following is a list of selected real engineering problems solved with the help of the SIFEL package. They are mechanical and transport problems based on multi-level homogenization in combination with parallel computing. The examples were solved within two projects supported by the Czech Science Foundation: project No. 18-24867S, Multi-scale modelling of mechanical properties of heterogeneous material and structures on PC clusters, and project No. 15-17615S, Parallel computing for multi-scale modelling of heterogeneous materials and structures.

Parallel 2D linear statics - benchmark of hybrid parallel homogenization with linear elastic materials

The 2D hybrid parallel mechanical homogenization benchmark is used to verify the code, mainly the MPI communication and data transfer between processors. At the macro level, the model consists of two quadrilateral finite elements loaded by a static load.

These two elements are connected with two simple RVEs at the meso level, which are discretized by four quadrilateral elements.

The plane stress problem is solved on three processors. The MPI library is required to compile the program.

How to run computations in Linux:

Download the source files from the link Version of HPMEFEL and unpack them. In the directory HPARAL/SRC, build the binary files with "make". The binary file is located in the directory "../BIN/HPARAL/SRC/_DBG".

If the code is compiled with optimization ("make opt"), the binary file is located in "../BIN/HPARAL/SRC/_OPT".

Download the input files and unpack them into the directory HPARAL.

Copy the binary file hpmefel into this directory.

The configuration file mpd.hosts is needed; it contains the "HOSTNAMES" of the machines on which the program will run.

To run the benchmark, type "mpiexec -n 3 ./hpmefel hom_mech".
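The mpd.hosts file mentioned in the steps above can be prepared by hand or generated. The following is only a minimal sketch: it assumes the common MPD convention of one host name per line, and the machine names `node01` to `node03` are hypothetical placeholders, not names from the SIFEL distribution.

```python
def hosts_file_text(hostnames):
    """Return mpd.hosts content with one host name per line (assumed format)."""
    cleaned = [name.strip() for name in hostnames if name.strip()]
    return "\n".join(cleaned) + "\n"


# Hypothetical machine names; replace them with the real host names.
# Three entries are used here because the benchmark runs on three processors.
hosts = ["node01", "node02", "node03"]
hosts_text = hosts_file_text(hosts)
print(hosts_text, end="")

# To write the file next to the binary, one could use:
# with open("mpd.hosts", "w") as handle:
#     handle.write(hosts_text)
```

Check the documentation of the installed MPI implementation before relying on this layout, since the expected format of the hosts file may differ.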

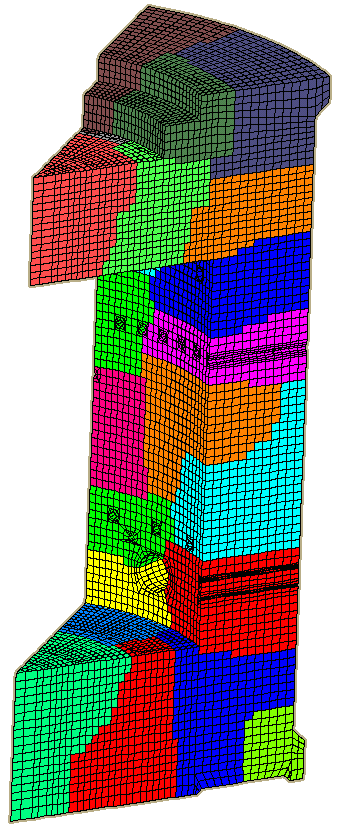

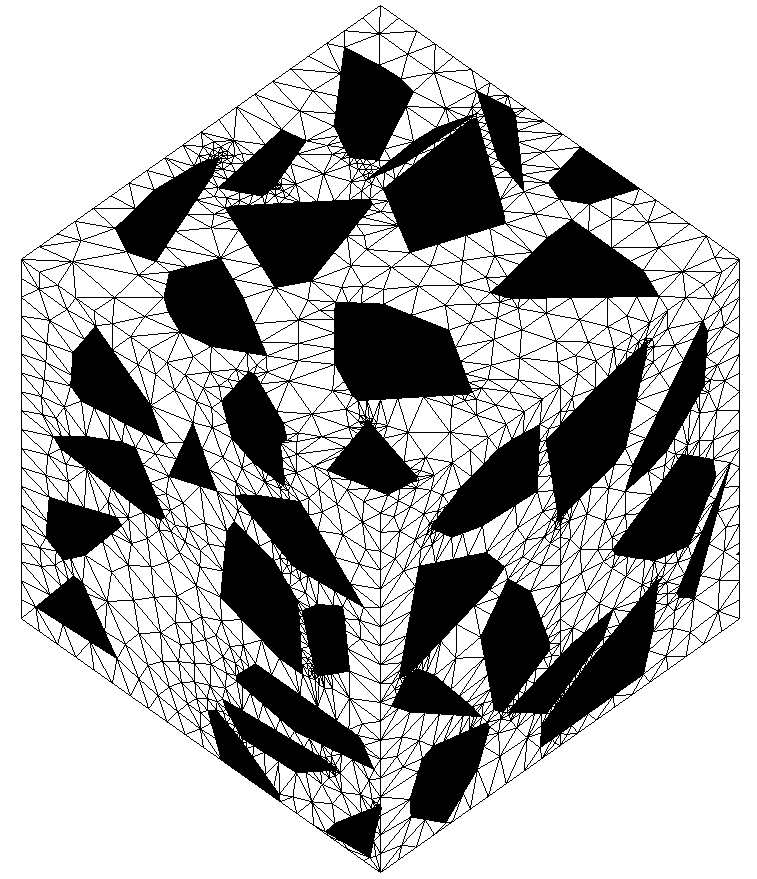

Parallel 3D linear statics - benchmark of hybrid parallel homogenization with linear elastic materials

The 3D hybrid parallel mechanical homogenization benchmark is also used to verify the code, mainly the MPI communication and data transfer between processors.

At the macro level, the model consists of four hexahedral finite elements loaded by a static load.

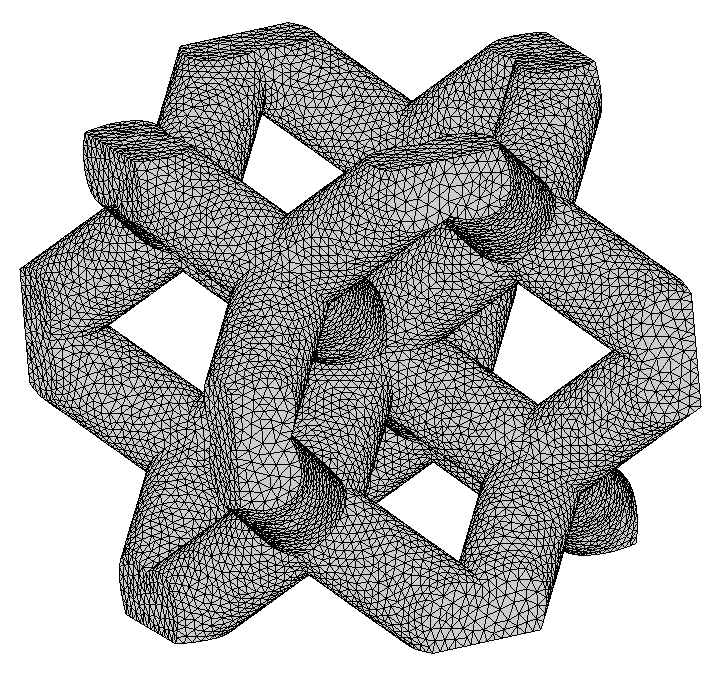

These elements are connected with four complicated RVEs at the meso level, discretized by 98266 tetrahedral elements and 18798 nodes.

The spatial (3D) stress problem is solved on five processors. The MPI library is required to compile the program.

How to run computations in Linux:

Download the source files from the link Version of HPMEFEL and unpack them. In the directory HPARAL/SRC, build the binary files with "make". The binary file is located in the directory "../BIN/HPARAL/SRC/_DBG".

If the code is compiled with optimization ("make opt"), the binary file is located in "../BIN/HPARAL/SRC/_OPT".

Download the input files and unpack them into the directory HPARAL.

Copy the binary file hpmefel into this directory.

The configuration file mpd.hosts is needed; it contains the "HOSTNAMES" of the machines on which the program will run.

To run the benchmark, type "mpiexec -n 5 ./hpmefel hom_masonry_3D_".

Parallel 2D heat transfer - benchmark of hybrid parallel homogenization of nonstationary heat transfer

The 2D hybrid parallel heat transfer homogenization benchmark is also used to verify the code.

The model consists of 24 quadrilateral elements loaded by boundary conditions of prescribed temperatures at the macro level.

These elements are connected with only two simple RVEs at the meso level, which are also discretized by 24 quadrilateral elements.

The 2D nonstationary problem is solved on three processors because the elements at the macro level are aggregated into two domains with homogenized material properties. The MPI library is required to compile the program.
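The aggregation described above can be illustrated with a small sketch. The rule used here (contiguous blocks of element numbers) is a hypothetical choice made only for illustration; the source does not say how the 24 macro elements are assigned to the two domains. The process count assumes one process for the macro level plus one per aggregated domain, which matches the three processors quoted in the text.

```python
def aggregate(n_elements, n_domains):
    """Map element ids 0..n_elements-1 to domains in contiguous blocks.

    The contiguous-block rule is an assumption for illustration only.
    """
    base, extra = divmod(n_elements, n_domains)
    mapping = {}
    start = 0
    for domain in range(n_domains):
        size = base + (1 if domain < extra else 0)
        for element in range(start, start + size):
            mapping[element] = domain
        start += size
    return mapping


element_to_domain = aggregate(24, 2)

# Assumed layout: one macro-level process plus one process per domain.
n_processes = 1 + len(set(element_to_domain.values()))

domain_sizes = {}
for domain in element_to_domain.values():
    domain_sizes[domain] = domain_sizes.get(domain, 0) + 1

print("elements per domain:", domain_sizes)
print("processes needed:", n_processes)
```

With this assumed rule, each domain collects 12 macro elements, so only two RVE problems have to be solved instead of 24.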

How to run computations in Linux:

Download the source files from the link Version of HPTRFEL and unpack them. In the directory HPARAL/SRC, build the binary files with "make". The binary file is located in the directory "../BIN/HPARAL/SRC/_DBG".

If the code is compiled with optimization ("make opt"), the binary file is located in "../BIN/HPARAL/SRC/_OPT".

Download the input files and unpack them into the directory HPARAL.

Copy the binary file hptrfel into this directory.

The configuration file mpd.hosts is needed; it contains the "HOSTNAMES" of the machines on which the program will run.

To run the benchmark, type "mpiexec -n 3 ./hptrfel hom_trans".

Parallel 2D coupled thermo-mechanical analysis - benchmark of hybrid parallel homogenization of coupled problem

This 2D hybrid parallel coupled homogenization benchmark solves a simple thermo-mechanical problem with a staggered algorithm.

At the macro level, the model consists of two quadrilateral elements loaded by a constant load in the mechanical part and simple boundary conditions of prescribed temperatures in the transport part.

The elements are connected with only two simple RVEs at the meso level, one for the mechanical part and one for the transport part.

The 2D time-dependent problem is solved on three processors.

Processor No. 1 solves the coupled problem, and processors No. 2 and No. 3 solve homogenization problems for mechanical and transport parts.

The homogenized material properties (effective stiffness, conductivity, and capacity matrices) are transferred from the meso level to the macro level.
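The division of work among the three processors can be written down as a small table. This is a sketch of the roles only, not of the SIFEL implementation: the class and function names are hypothetical, and the assignment of the mechanical RVE to processor No. 2 and the transport RVE to processor No. 3 follows the order in which the text lists them, which is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    processor: int  # 1-based number, as in the text
    level: str      # "macro" or "meso"
    part: str       # part of the coupled problem handled by the processor
    returns: tuple  # effective matrices sent to the macro level


LAYOUT = (
    Role(1, "macro", "coupled thermo-mechanical problem", ()),
    Role(2, "meso", "mechanical RVE", ("stiffness",)),
    Role(3, "meso", "transport RVE", ("conductivity", "capacity")),
)


def collect_effective_properties(layout):
    """List the matrices the macro level receives from each meso processor."""
    return {role.processor: role.returns for role in layout if role.level == "meso"}


received = collect_effective_properties(LAYOUT)
for processor, names in received.items():
    print(f"processor {processor} -> macro level: {', '.join(names)}")
```

The processor counts of the purely mechanical benchmarks (two RVEs on three processors, four RVEs on five processors) are consistent with the same pattern of one macro-level process plus one process per RVE.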

How to run computations in Linux:

Download the source files from the link Version of HPMETR and unpack them. In the directory HPARAL/SRC, build the binary files with "make". The binary file is located in the directory "../BIN/HPARAL/SRC/_DBG".

If the code is compiled with optimization ("make opt"), the binary file is located in "../BIN/HPARAL/SRC/_OPT".

Download the input files and unpack them into the directory HPARAL.

Copy the binary file hpmetr into this directory.

The configuration file mpd.hosts is needed; it contains the "HOSTNAMES" of the machines on which the program will run.

To run the benchmark, type "mpiexec -n 3 ./hpmetr hom_coup".
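The run commands of the four benchmarks differ only in the binary name, the number of processes, and the input name. The sketch below collects them in one table and assembles the command lines. The binary names, process counts, and input names are taken from the text above; the dictionary keys and the helper function are hypothetical names introduced for illustration.

```python
# (binary, number of processes, input name), as quoted in the text above.
BENCHMARKS = {
    "2D linear statics": ("hpmefel", 3, "hom_mech"),
    "3D linear statics": ("hpmefel", 5, "hom_masonry_3D_"),
    "2D heat transfer": ("hptrfel", 3, "hom_trans"),
    "2D coupled analysis": ("hpmetr", 3, "hom_coup"),
}


def mpiexec_command(binary, n_processes, input_name):
    """Return the mpiexec invocation as an argument list."""
    return ["mpiexec", "-n", str(n_processes), f"./{binary}", input_name]


commands = {
    name: " ".join(mpiexec_command(*spec)) for name, spec in BENCHMARKS.items()
}

for name, command in commands.items():
    print(f"{name}: {command}")
```

The argument-list form returned by `mpiexec_command` can be passed directly to `subprocess.run` if the benchmarks are to be launched from a script, provided the binary and the input files are in the working directory.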

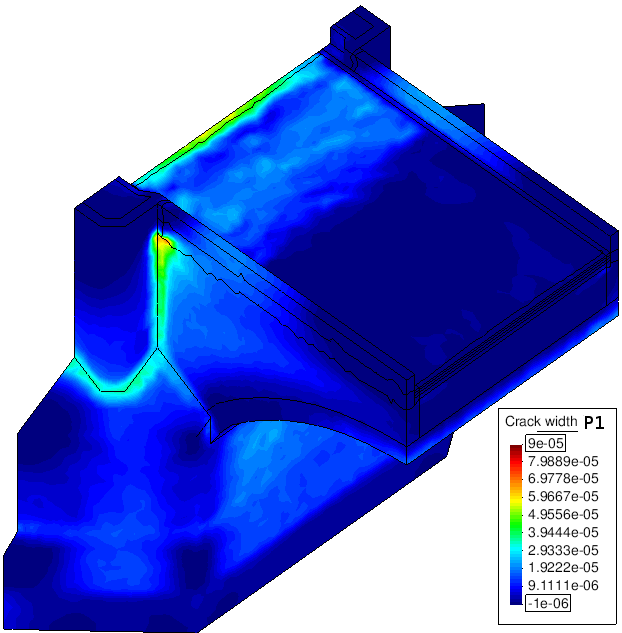

Parallel 3D Hygro-thermo-mechanical analysis of Charles bridge in Prague

The numerical model simulates the coupled heat and moisture transfer and the mechanical response of one half of arch III over two years, 2011 - 2012. The finite element mesh was created using tetrahedral elements with linear approximation functions. It has 73749 nodes and 387773 elements. The analyzed segment of Charles Bridge was split into 12 sub-domains. The average number of nodes and elements in one sub-domain is 7000 and 32000, respectively. The parallel computation was performed on a heterogeneous PC cluster whose computers are based on 32-bit Intel E6850 processors with frequencies in the range of 2.4 to 3 GHz and memory from 3 GB to 3.3 GB. The parallel algorithm performed 7596 time steps. Each time step covered two hours (7200 seconds). The total computation (CPU) time was one month.
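The figures quoted above can be cross-checked with a few lines of arithmetic. The sketch uses only numbers stated in the paragraph; the variable names are chosen here for illustration.

```python
n_nodes = 73749
n_elements = 387773
n_subdomains = 12
n_steps = 7596
step_seconds = 7200

# Plain division of the mesh among the sub-domains.
nodes_per_subdomain = n_nodes / n_subdomains
elements_per_subdomain = n_elements / n_subdomains

# Simulated time covered by all time steps.
simulated_seconds = n_steps * step_seconds
simulated_days = simulated_seconds / 86400

print(f"nodes per sub-domain:    {nodes_per_subdomain:.2f}")
print(f"elements per sub-domain: {elements_per_subdomain:.2f}")
print(f"simulated time:          {simulated_seconds} s = {simulated_days:.0f} days")
```

Plain division gives about 6146 nodes and 32314 elements per sub-domain. The element count agrees with the quoted average of 32000. The quoted average of 7000 nodes is higher than the plain division, which would be consistent with nodes on sub-domain interfaces being counted in more than one sub-domain, although the source does not state this. The 7596 steps of two hours correspond to 633 days of simulated time.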

- Related papers: paper.pdf

- Results gallery

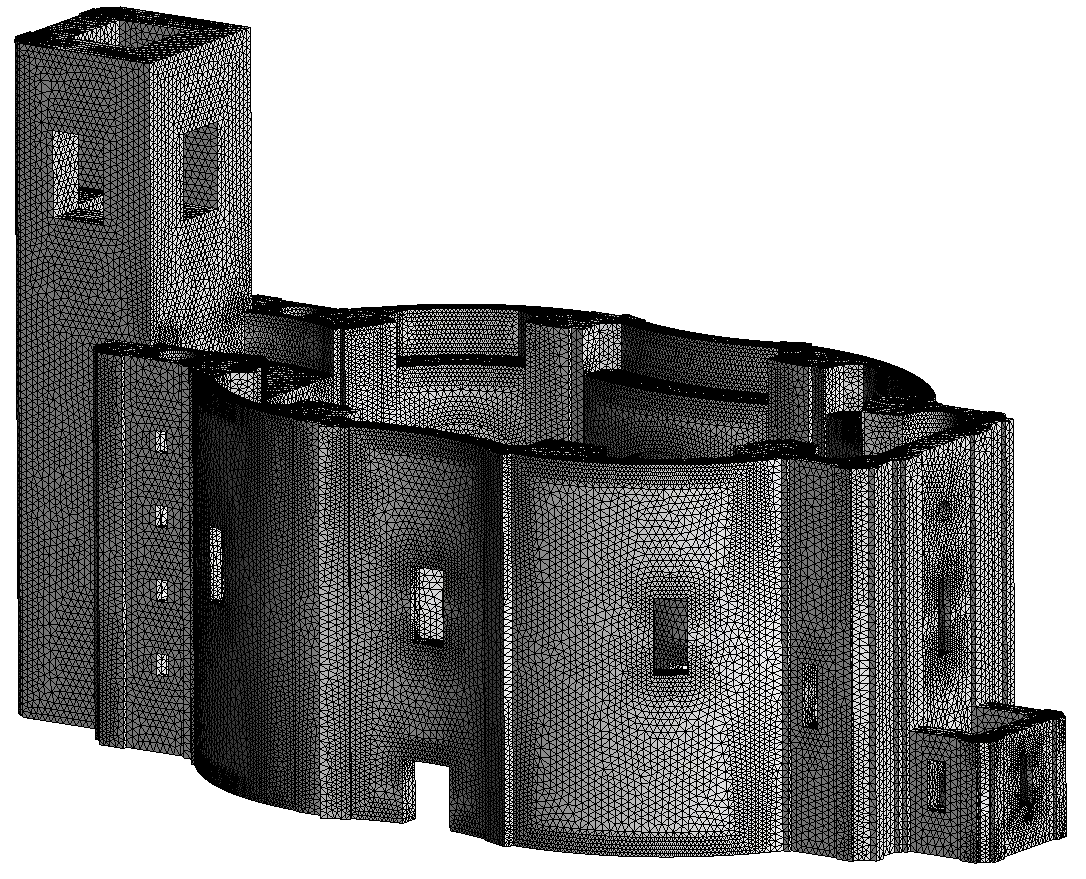

Parallel 3D Thermo-mechanical analysis of St. Ann Church

The numerical model simulates the coupled heat and moisture transfer and mechanical response of St. Ann Church in the Broumov group of churches influenced by climatic conditions. Presentation in progress.

Homogenization of trabecular and gyroid structures for dental and bone implants

Computer analysis of the effective mechanical properties of trabecular and gyroid structures for dental and bone implants, based on the two-level approach. Presentation in progress.

- Related papers: paper.pdf

- Results gallery

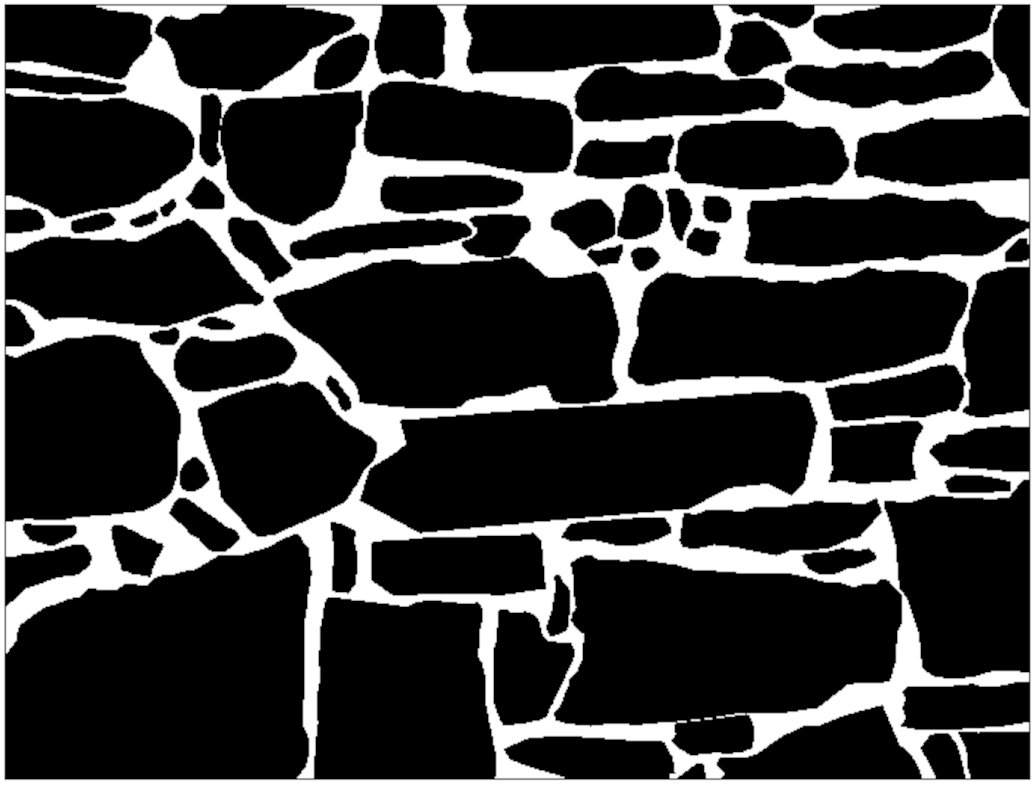

Homogenization of mechanical properties of masonry structures

Computer analysis of the effective mechanical properties of masonry structures, based on the two-level approach. Presentation in progress.

- Related papers: paper.pdf

- Results gallery